For embedded developers, DIY enthusiasts and researchers, integrating AI into products and projects keeps getting easier. Face detection, object presence detection, people counting and other computer vision applications are now popular and quite common, and the supporting pieces (off-the-shelf sensors, processors with enough compute, and even the software and libraries that implement the algorithms) keep maturing.

Over the past two years we have taken part in many college students' electronics competitions and other contests. Board sponsors increasingly provide development boards with AI acceleration units, and organizers such as universities encourage students to build AI into their entries as much as possible, some even making it a hard requirement in the rules. For example, at the end of last year we took part in the National College Students' Electronic Design Competition, sponsored by Renesas. The board provided included an AI image-processing acceleration unit, and the competition was intended to highlight exactly that feature.

This has a lot to do with the broader trend toward AIoT, or edge AI. That AI, and computer vision in particular, now shows up in student competitions suggests that the barrier to adopting AI has dropped considerably; put another way, AI as represented by computer vision applications is maturing.

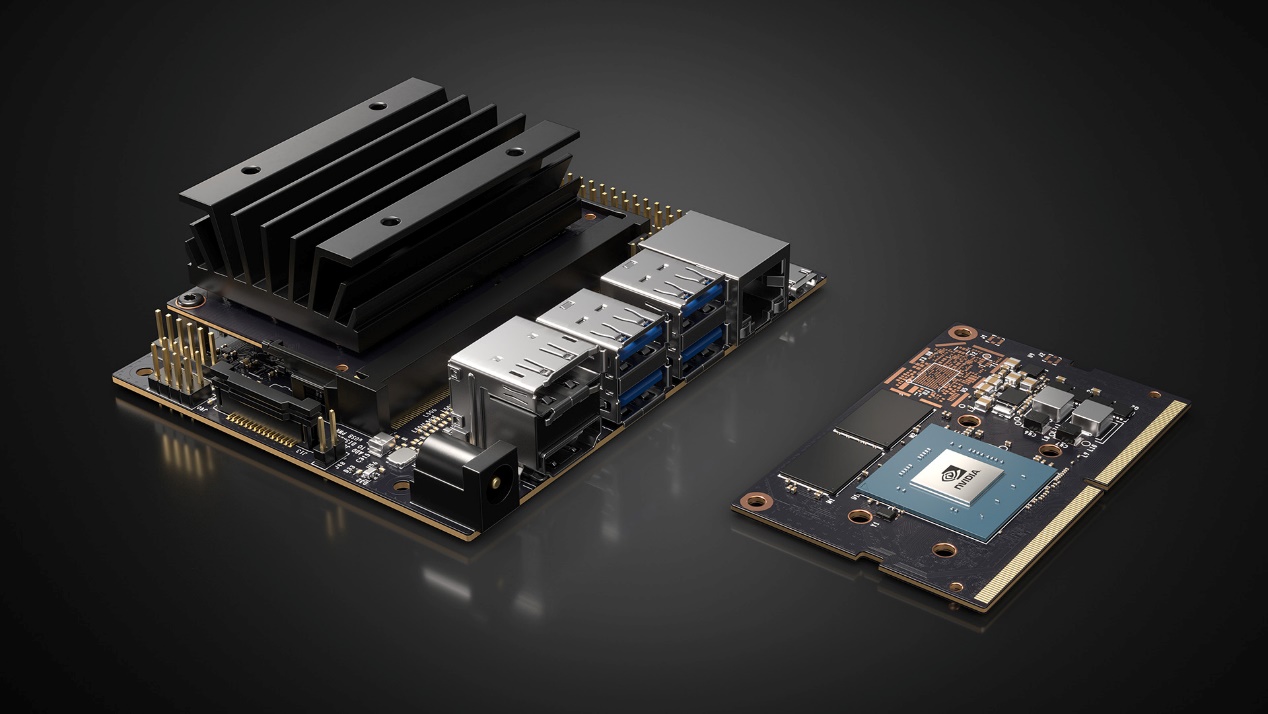

Even NVIDIA, whose AI business is centered on the cloud, launched the $99 Jetson Nano Developer Kit at GTC 2019, which seems somewhat out of character for the NVIDIA we know. Products like this are valuable for AI algorithm research and for testing AI product prototypes.

Some more general-purpose development boards without a dedicated AI core can still improve compute efficiency by supporting Tengine, a lightweight, modular neural network inference engine; one example is the Rockchip RK3399 development board (ROCK960). The more advanced RK3399Pro adds a dedicated NPU. Another example is the Amlogic A311D development board, whose A311D chip also integrates a dedicated NPU. Google's Edge TPU and Intel's Myriad X also come up below.

The main difference between the Jetson Nano and these products is that NVIDIA continues its tradition of using the GPU for AI computing (and those boards are not exactly cheap either). This is where NVIDIA's thinking differs from theirs. Still, that the Jetson Nano, including the 2GB version, supports CUDA in such a small package is quite remarkable (it currently seems to sell for about 430 yuan on Taobao).

On the AI performance of edge computing

The main specs of the Jetson Nano 2GB Developer Kit are a quad-core 1.43 GHz Arm Cortex-A57 CPU and a GPU based on NVIDIA's Maxwell architecture (the generation before Pascal, which powered the GeForce 10 series) with 128 CUDA cores, delivering 472 GFLOPS of half-precision (FP16) floating-point performance. That falls short of more specialized VPUs such as the Myriad X and the NPUs mentioned above, but it is still far stronger than a CPU, and NVIDIA's unmatched AI development ecosystem can, to some extent, offset competitors' lead in raw hardware performance. We will come back to this when discussing the ecosystem.
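Where does the 472 GFLOPS figure come from? A back-of-the-envelope check, as a minimal sketch: peak throughput is cores × clock × operations per core per cycle. The GPU clock (921.6 MHz), the count of one fused multiply-add (2 FLOPs) per core per cycle, and FP16 running at twice the FP32 rate are our assumptions, not figures from the article.

```python
# Theoretical peak throughput of the Jetson Nano GPU.
# Assumptions (not from the article): 921.6 MHz GPU clock, one fused
# multiply-add (2 FLOPs) per CUDA core per cycle, FP16 at 2x the FP32 rate.

CUDA_CORES = 128          # from the spec list
GPU_CLOCK_HZ = 921.6e6    # assumed maximum GPU clock
FLOPS_PER_FMA = 2         # a fused multiply-add counts as two operations
FP16_SPEEDUP = 2          # assumed packed-FP16 rate relative to FP32


def peak_gflops(cores, clock_hz, flops_per_cycle, precision_factor=1):
    """Return theoretical peak throughput in GFLOPS."""
    return cores * clock_hz * flops_per_cycle * precision_factor / 1e9


fp32 = peak_gflops(CUDA_CORES, GPU_CLOCK_HZ, FLOPS_PER_FMA)
fp16 = peak_gflops(CUDA_CORES, GPU_CLOCK_HZ, FLOPS_PER_FMA, FP16_SPEEDUP)
print(f"FP32 peak: {fp32:.1f} GFLOPS")  # FP32 peak: 235.9 GFLOPS
print(f"FP16 peak: {fp16:.1f} GFLOPS")  # FP16 peak: 471.9 GFLOPS
```

Under these assumptions the FP16 result rounds to the quoted 472 GFLOPS. Real workloads rarely approach this peak, which is one reason software optimization matters so much on this board.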

From this configuration it is easy to see that, within NVIDIA's Jetson family, the Nano targets low-power, low-cost IoT and is positioned mainly for inference, although NVIDIA's own materials suggest it can also be used for AI training.

Other specs include 2GB of 64-bit LPDDR4 memory with 25.6 GB/s of bandwidth; video encoding up to 4K30 or 4× 1080p30, and decoding up to 4K60 or 8× 1080p30; one MIPI CSI-2 camera connector (supporting the Raspberry Pi camera module, Intel RealSense and many other camera modules, including ones built on the IMX219 CMOS image sensor); plus USB, HDMI, Gigabit Ethernet, a wireless adapter and a microSD slot for local storage. We will not go through the remaining I/O. Besides more memory, the $99 4GB version of the Jetson Nano Developer Kit has richer I/O, including an M.2 slot that takes an additional wireless card.
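Two of these figures can be sanity-checked with simple arithmetic. This is a minimal sketch assuming the LPDDR4 runs at 3200 MT/s (1600 MHz, double data rate) and that "4K" means 3840×2160; neither detail is stated above. It also shows that the single-stream and multi-stream video figures describe the same total pixel throughput.

```python
# Sanity checks for the memory and video specs.
# Assumptions (not from the article): LPDDR4 at 3200 MT/s, 4K = 3840x2160.


def dram_bandwidth_gbs(bus_width_bits, transfers_per_sec):
    """Peak DRAM bandwidth in GB/s (1 GB = 1e9 bytes)."""
    return bus_width_bits / 8 * transfers_per_sec / 1e9


def pixel_rate(width, height, fps, streams=1):
    """Total pixels per second a video codec must process."""
    return width * height * fps * streams


bandwidth = dram_bandwidth_gbs(64, 3200e6)
decode_4k60 = pixel_rate(3840, 2160, 60)
decode_8x1080p30 = pixel_rate(1920, 1080, 30, streams=8)
encode_4k30 = pixel_rate(3840, 2160, 30)
encode_4x1080p30 = pixel_rate(1920, 1080, 30, streams=4)

print(f"{bandwidth:.1f} GB/s")            # 25.6 GB/s
print(decode_4k60 == decode_8x1080p30)    # True
print(encode_4k30 == encode_4x1080p30)    # True
```

In other words, the codec budget is a fixed pixel rate that can be spent on one large stream or split across several smaller ones.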

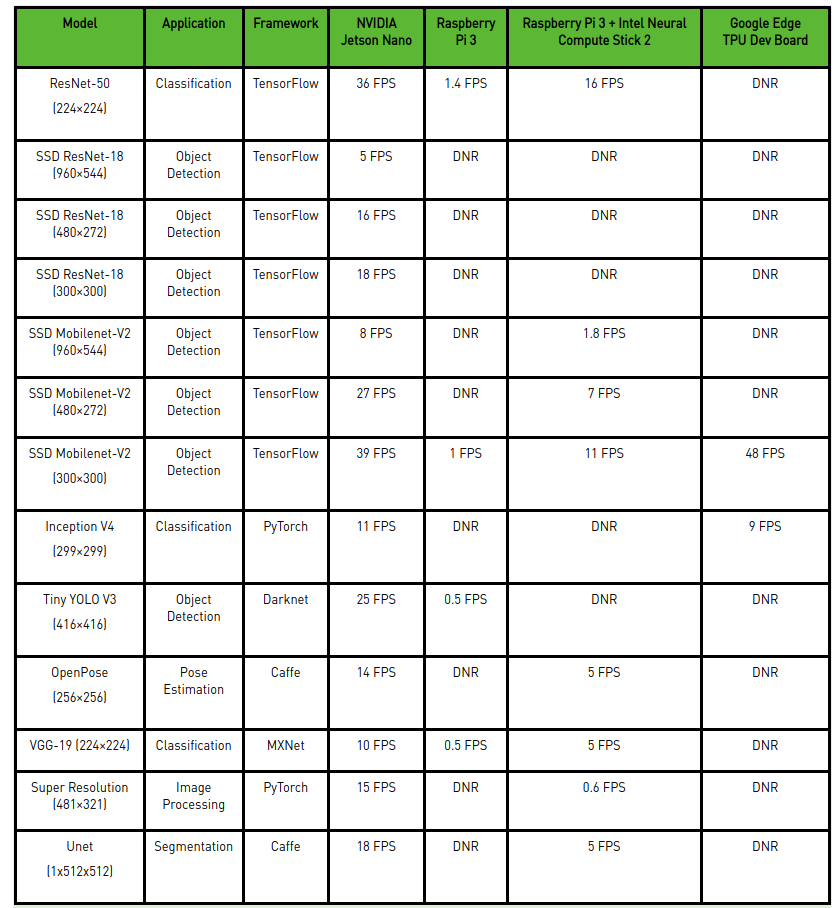

NVIDIA published a chart comparing the real-time inference performance (batch size 1, FP16 precision) of the Jetson Nano, the Raspberry Pi 3, the Raspberry Pi 3 with an Intel Neural Compute Stick 2 (based on the Myriad X), and the Google Edge TPU Dev Board on video-stream workloads across various models, as shown in the figure above (note: the comparison uses the 4GB Jetson Nano).

The Nano's crushing lead over the Raspberry Pi and its slightly lower performance than the Edge TPU are both expected: that is the difference between general-purpose and special-purpose hardware (its lead over the Myriad X may reflect the strength of its software ecosystem). However, many entries in the table read "DNR", meaning "did not run", possibly because of limited memory, unsupported network layers, or other hardware and software restrictions. What NVIDIA wants to convey here is that the GPU is considerably more versatile.

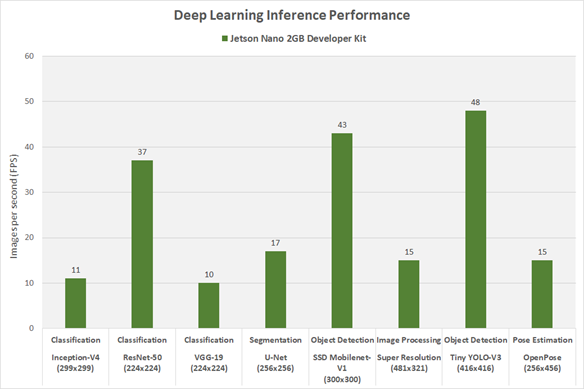

The performance of the 2GB Jetson Nano on mainstream models is as follows:

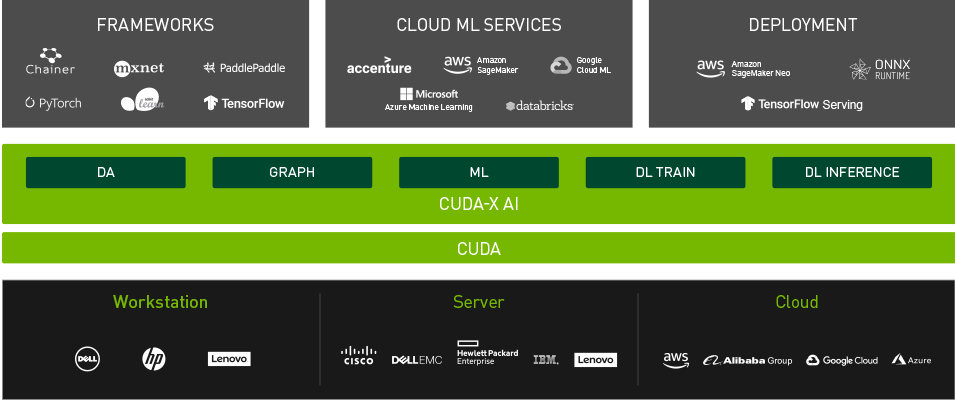
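Numbers like these come from timing one frame at a time (batch size 1). Here is a minimal sketch of such a harness, assuming `infer` is any callable that runs a model on one frame; it is hypothetical and stands in for a real model call. A few untimed warm-up runs absorb one-off setup costs. Treating a `MemoryError` or `NotImplementedError` as "DNR" is our own convention, mirroring the chart's "did not run" entries.

```python
import time
from typing import Callable, Iterable, Optional


def measure_fps(
    infer: Callable[[object], object],
    frames: Iterable[object],
    warmup: int = 2,
    clock: Callable[[], float] = time.perf_counter,
) -> Optional[float]:
    """Run `infer` on one frame at a time (batch size 1) and return frames/s.

    Returns None ("did not run") if the model fails with MemoryError or
    NotImplementedError, e.g. out of memory or an unsupported layer.
    """
    frames = list(frames)
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")
    try:
        for frame in frames[:warmup]:   # warm-up runs are not timed
            infer(frame)
        start = clock()
        for frame in frames[warmup:]:
            infer(frame)
        elapsed = clock() - start
    except (MemoryError, NotImplementedError):
        return None
    timed = len(frames) - warmup
    return timed / elapsed if elapsed > 0 else float("inf")


def format_result(fps: Optional[float]) -> str:
    """Render a result the way a benchmark table would."""
    return "DNR" if fps is None else f"{fps:.1f} FPS"
```

The `clock` parameter exists so the timing logic can be checked with a fake clock; on the board you would leave it at `time.perf_counter`.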

When AI starts talking about ecosystems

Speaking of versatility, NVIDIA has almost no rival in building an AI ecosystem. NVIDIA has said more than once that AI is advancing rapidly, researchers are quickly inventing new neural network architectures, and AI researchers and practitioners use a variety of AI models in their projects. "So for learning and building AI projects, the ideal platform should be able to run a variety of AI models, have enough flexibility, and provide enough performance to build meaningful interactive AI experiences."

Support for mainstream ML frameworks such as TensorFlow, PyTorch, Caffe and Keras goes without saying. The table above lists popular DNN models, which the Jetson Nano supports. Fairly complete framework support, sufficient memory capacity, a unified memory subsystem and a wide range of software support are the foundation of this versatility. Moreover, beyond DNN inference, the Jetson Nano's CUDA architecture can also be used for a wider range of computer vision and DSP work, including various algorithms and operations.

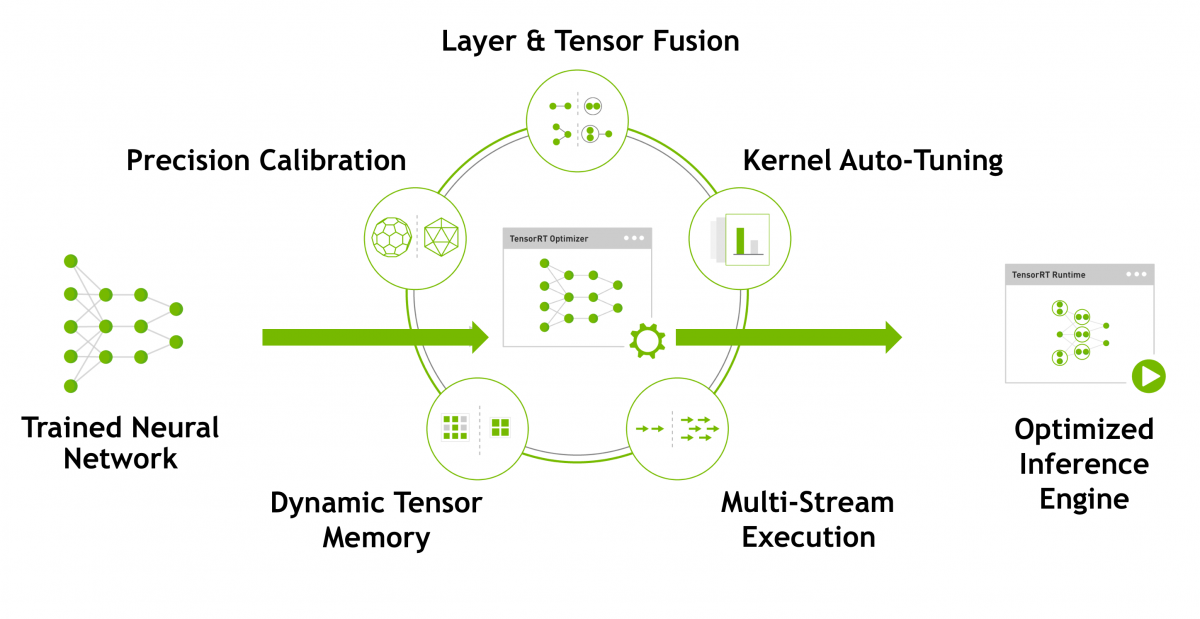

It is particularly worth noting that the models above run on the Jetson Nano with TensorRT. Readers familiar with NVIDIA's AI ecosystem will know that TensorRT is NVIDIA's inference optimization middleware: given a trained model, TensorRT generates an optimized runtime engine for the CUDA GPU. It is an important part of how NVIDIA optimizes AI computing performance.

As software, TensorRT is a key part of achieving the higher frame rates and compute efficiency in the examples above. Some researchers have tested compiling a Caffe model directly on the Jetson Nano 2GB without TensorRT optimization; it runs without major problems, but the TensorRT-accelerated version is far more efficient.
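NVIDIA's comparison above was run at FP16 precision, and TensorRT can build engines that execute in FP16. To give a feel for what half precision gives up, the minimal sketch below rounds values to IEEE 754 binary16 using Python's `struct` module, whose `"e"` format code packs half-precision floats. It only illustrates the number format; it is not how TensorRT decides which layers run in reduced precision.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def relative_error(x: float) -> float:
    """Relative rounding error introduced by storing x in FP16 (x != 0)."""
    return abs(to_fp16(x) - x) / abs(x)


print(to_fp16(0.1))  # 0.0999755859375
for value in (0.1, 1 / 3, 1.0):
    print(value, "->", to_fp16(value), f"(relative error {relative_error(value):.1e})")
```

FP16 keeps only about three significant decimal digits, which trained networks usually tolerate well; in exchange, each value takes half the memory and bandwidth of FP32.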

TensorRT is, on the one hand, part of why NVIDIA says every year that it is not (or not just) a chip company; on the other hand, it offers a glimpse of the ecosystem resources NVIDIA puts behind the Jetson Nano.

In short, all Jetson hardware platforms share the same software tools and SDKs. The software for the Jetson Nano Developer Kit is the JetPack SDK, which includes the Ubuntu operating system and libraries for building end-to-end AI applications: OpenCV and VisionWorks for computer vision and image processing, and CUDA, cuDNN, TensorRT and other libraries for accelerating AI inference. NVIDIA previously marketed its collection of software acceleration libraries as the CUDA-X software stack (though it seems to use the term less this year).

Among these, the ones most closely tied to the Jetson Nano include NVIDIA DeepStream for intelligent video analytics, Clara for medical imaging, genomics and patient monitoring, and Isaac for robotics. Further ready-made resources include the large number of pre-trained models NVIDIA provides; after customizing them through transfer learning, you can deploy them on the Jetson Nano for inference, which is also an important part of this ecosystem.

We have covered much of this in past articles on the NVIDIA ecosystem. At the "AI ecosystem" level, other boards look weak by comparison; for now, even more efficient dedicated ASIC AI processors seem to be no match in this respect.

A few examples

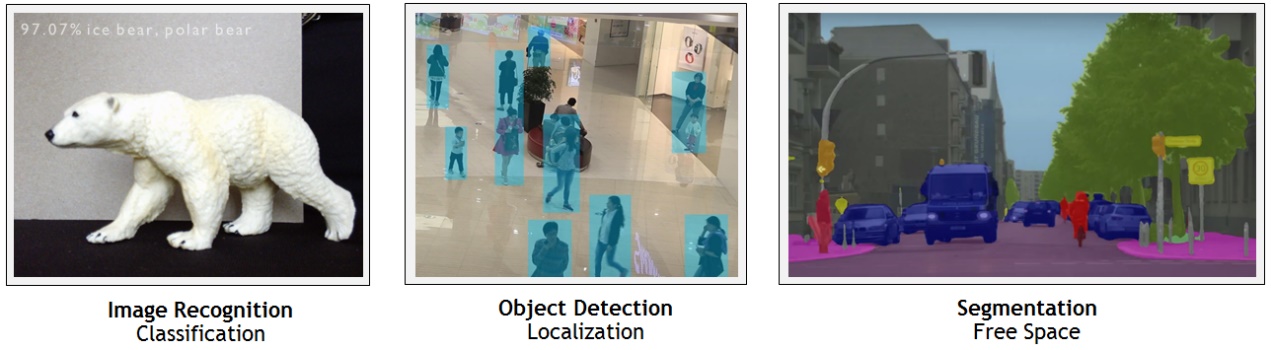

We went through NVIDIA's official Jetson Nano development guide, which covers environment setup, simple use of ready-made libraries and so on, and found that the Jetson Nano's main applications are object detection, image classification, semantic segmentation and speech processing. More specifically, these may include NVRs (network video recorders), autonomous cars, smart speakers, access control systems, SLAM robots, and various embedded AIoT applications for intelligent transportation and smart cities.

To make this concrete, here are a few simple examples. Similar boards follow similar processes to implement many of these features, but the Jetson Nano has a broader ecosystem. We will skip the details of environment setup, which is relatively simple: write the system image to a microSD card, insert the card into the slot of the Jetson Nano (4GB or 2GB), connect the power supply, mouse, keyboard and monitor, and you are ready to go. The operating system is Ubuntu 18.04 (L4T); on the 2GB version, the desktop defaults to the lightweight LXDE to reduce resource usage. The board is suitable for learning and developing with Python, OpenCV, deep learning, ROS-based automatic control and other applications.

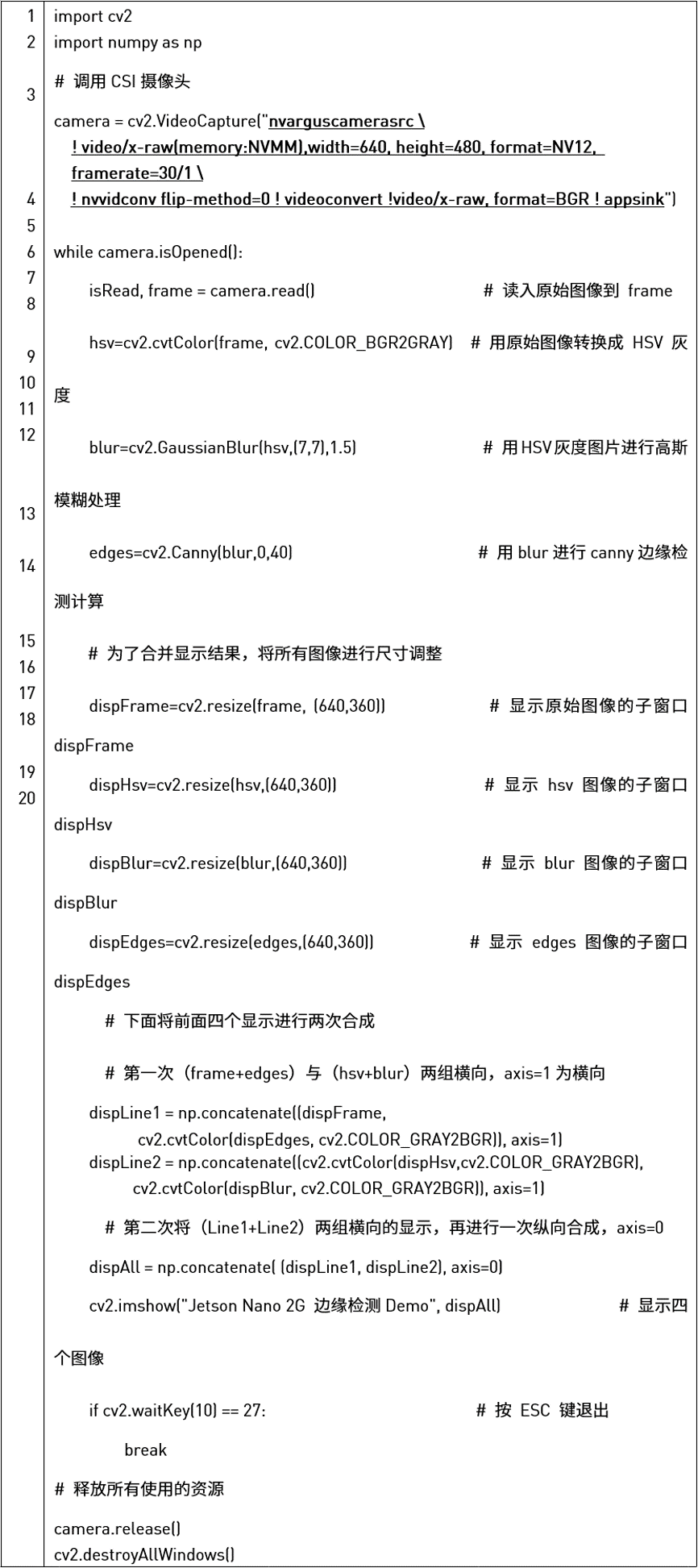

Attach a camera to the Jetson Nano, either through the CSI connector mentioned above or over USB. JetPack ships with an OpenCV development environment, so with a CSI camera you can feed camera input into basic machine vision applications, such as resizing (scaling and interpolating) and rotating the input image, or, more advanced, tracking objects of a specific color in the frame, edge detection, and face or eye tracking.

Source: NVIDIA Enterprise Solutions
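On JetPack, OpenCV usually reads a CSI camera through a GStreamer pipeline rather than a plain device index. Here is a minimal sketch of a helper that builds such a pipeline string. It uses `nvarguscamerasrc` (JetPack's CSI camera source) and `nvvidconv` (NVIDIA's hardware converter, whose `flip-method` property flips or rotates the frame). The default resolution and frame rate are our assumptions; adjust them to your sensor.

```python
def csi_pipeline(sensor_id=0, width=1280, height=720, fps=30, flip_method=0):
    """Build a GStreamer pipeline string for a CSI camera on JetPack.

    nvarguscamerasrc captures NV12 frames into NVMM (GPU-accessible) memory,
    nvvidconv converts them (and can flip/rotate via flip-method), and
    videoconvert produces the BGR frames that OpenCV expects at appsink.
    Default width/height/fps are assumptions, not sensor requirements.
    """
    return (
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width={width}, height={height}, "
        f"format=NV12, framerate={fps}/1 ! "
        f"nvvidconv flip-method={flip_method} ! "
        f"video/x-raw, width={width}, height={height}, format=BGRx ! "
        "videoconvert ! video/x-raw, format=BGR ! appsink"
    )
```

On the board, you would open the camera with `cap = cv2.VideoCapture(csi_pipeline(), cv2.CAP_GSTREAMER)` and read frames with `ok, frame = cap.read()`; passing `flip_method=2` rotates the image by 180 degrees. A USB camera can usually be opened with `cv2.VideoCapture(0)` instead.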

Taking edge detection as an example, the process roughly goes like this: first convert the image to grayscale; then apply a Gaussian blur to the grayscale image to reduce noise; finally, find the edge lines in the image. The whole process takes just a few function calls, as shown in the figure above. The implementation and results are as follows:

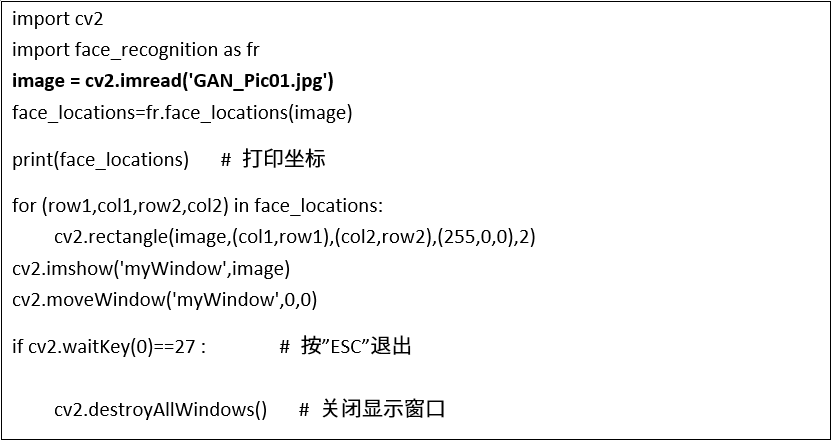

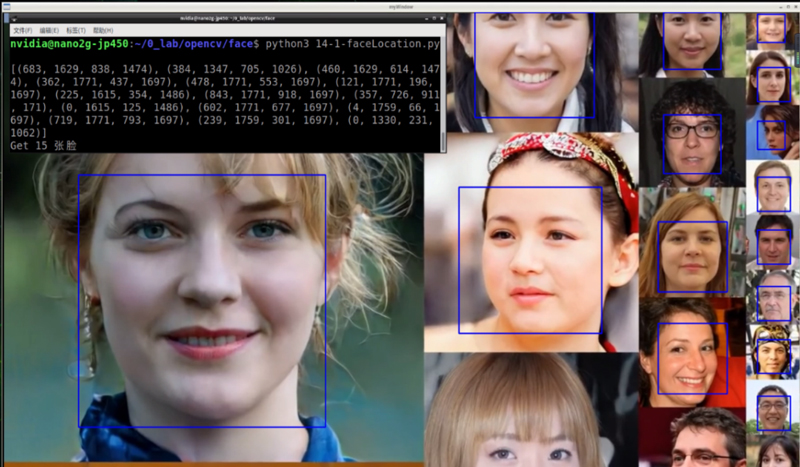
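In OpenCV, the three steps correspond to calls like `cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)`, `cv2.GaussianBlur(gray, (5, 5), 0)` and `cv2.Canny(blurred, 50, 150)` (the kernel size and thresholds here are illustrative). To show what happens inside, here is a dependency-free sketch of the same pipeline on a tiny synthetic image. Note a swapped-in simplification: instead of Canny, it thresholds the Sobel gradient magnitude, with no non-maximum suppression or hysteresis, so its edges come out thicker.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]   # (R, G, B)
Gray = List[List[float]]

# 3x3 binomial approximation of a Gaussian; weights sum to 1.
GAUSSIAN_3X3 = [[1 / 16, 2 / 16, 1 / 16],
                [2 / 16, 4 / 16, 2 / 16],
                [1 / 16, 2 / 16, 1 / 16]]
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def to_gray(rgb: Sequence[Sequence[Pixel]]) -> Gray:
    """Luma with BT.601 weights, the weights OpenCV's BGR2GRAY also uses."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def filter3x3(img: Gray, kernel: Sequence[Sequence[float]]) -> Gray:
    """Apply a 3x3 kernel (cross-correlation), replicating border pixels."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    yy = min(max(y + ky - 1, 0), h - 1)   # clamp to the image
                    xx = min(max(x + kx - 1, 0), w - 1)
                    acc += kernel[ky][kx] * img[yy][xx]
            out[y][x] = acc
    return out


def detect_edges(rgb: Sequence[Sequence[Pixel]], threshold: float) -> List[List[bool]]:
    """Grayscale -> Gaussian blur -> Sobel gradient magnitude -> threshold."""
    blurred = filter3x3(to_gray(rgb), GAUSSIAN_3X3)   # blur suppresses noise
    gx = filter3x3(blurred, SOBEL_X)                  # horizontal gradient
    gy = filter3x3(blurred, SOBEL_Y)                  # vertical gradient
    return [[(gx[y][x] ** 2 + gy[y][x] ** 2) ** 0.5 >= threshold
             for x in range(len(blurred[0]))]
            for y in range(len(blurred))]


# Synthetic 6x8 image: black left half, white right half (one vertical edge).
image = [[(0, 0, 0)] * 4 + [(255, 255, 255)] * 4 for _ in range(6)]
edges = detect_edges(image, threshold=500)
print([x for x, is_edge in enumerate(edges[0]) if is_edge])  # [3, 4]
```

The blur spreads the step across neighboring columns, so the strongest gradients land on the two columns at the boundary; Canny's non-maximum suppression would thin that band to a single line.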

That is a common machine vision application. Now let's look at a real AI application: face recognition using the OpenCV library and a Python 3 development environment. Companies need this kind of function for clock-in and roll-call systems, and face_recognition, a Python face recognition library, can handle it. It is built on the dlib open-source machine learning library, and its API is relatively simple.

The library's face_locations method finds the positions of faces in an image; OpenCV then draws boxes on the original image and displays the result. The code is shown in the figure below. This example locates faces in a still picture; to process video, read frames in a while loop.
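The figure's code is not reproduced in this text. The helper below is our own minimal sketch, not the code from NVIDIA's article. It handles one detail that often trips up beginners: `face_recognition.face_locations()` returns each face as a `(top, right, bottom, left)` tuple, while OpenCV's `cv2.rectangle()` expects two corner points, `(left, top)` and `(right, bottom)`. The optional `scale` factor is our own addition for a common speed-up, not something from the article: detect faces on a downscaled frame, then map the boxes back to full size.

```python
from typing import List, Tuple

# face_recognition.face_locations() returns boxes as (top, right, bottom, left).
FaceBox = Tuple[int, int, int, int]
Point = Tuple[int, int]


def to_cv2_rects(boxes: List[FaceBox], scale: float = 1.0) -> List[Tuple[Point, Point]]:
    """Convert face_recognition boxes to OpenCV (pt1, pt2) corner pairs.

    `scale` maps boxes found on a resized frame back to the original frame,
    e.g. scale=4 if detection ran on a frame shrunk to 1/4 size.
    """
    rects = []
    for top, right, bottom, left in boxes:
        pt1 = (int(left * scale), int(top * scale))        # top-left (x, y)
        pt2 = (int(right * scale), int(bottom * scale))    # bottom-right (x, y)
        rects.append((pt1, pt2))
    return rects
```

On the board, a typical loop body would be `for pt1, pt2 in to_cv2_rects(face_recognition.face_locations(rgb)): cv2.rectangle(frame, pt1, pt2, (0, 255, 0), 2)`. Here `rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)`, because face_recognition works on RGB images while OpenCV frames are BGR.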

After locating a face, you still need to compare it against a database of face features to complete identification. We will not go into that here; interested readers can follow the series of articles on the Jetson Nano 2GB published by NVIDIA's official WeChat account. Overall, getting started with AI development is quite easy and convenient.
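For readers who want a starting point anyway, here is a minimal sketch of the comparison step. In face_recognition, `face_recognition.face_encodings()` produces a 128-dimensional encoding per face, and `compare_faces()` treats two faces as a match when their Euclidean distance is at most a tolerance (0.6 by default). The `known` dictionary below is a hypothetical database, for example encodings computed from employee photos. The test uses toy 2-D vectors for readability.

```python
import math
from typing import Dict, Optional, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two encodings of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def identify(
    encoding: Sequence[float],
    known: Dict[str, Sequence[float]],
    tolerance: float = 0.6,
) -> Optional[str]:
    """Return the name whose stored encoding is nearest, or None if even the
    nearest one is farther than `tolerance` (an unknown face)."""
    best_name, best_dist = None, float("inf")
    for name, ref in known.items():
        dist = euclidean(encoding, ref)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= tolerance else None
```

Picking the nearest match, rather than the first one under the tolerance, avoids attributing a face to the wrong person when two stored encodings are both close. A real clock-in system would also log the time of each successful match.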

It is also worth mentioning that, to showcase the board's performance and ecosystem advantages, JetPack installs a set of CUDA samples. They include the ocean simulation oceanFFT, the smoke particle simulation smokeParticles (256×256 smoke particles whose motion changes along with lighting and shadows), the n-body simulation nbody, and more.

By comparing runs that use only the CPU with runs accelerated by the GPU's parallelism, you can clearly feel the difference in performance. These CUDA samples probably demonstrate the Jetson Nano's performance and ecosystem value better than anything else.

NVIDIA has also created Hello AI World for the Jetson product line so people can experience AI, and this too is part of the AI ecosystem. NVIDIA says developers can try all kinds of deep learning inference demos in just a few hours, using pre-trained models with the JetPack SDK and TensorRT on the Jetson Nano to run real-time image classification and object detection. (NVIDIA's developer blog also describes using the Jetson Nano to run a full training framework and retrain models through transfer learning. It works, but we expect it will not be quick...)

Hello AI World is essentially a tutorial focused on computer vision and camera applications: image classification, object detection, semantic segmentation, and deep learning nodes for ROS, an open-source project that integrates recognition, detection and other capabilities with ROS (Robot Operating System) to build robotic systems and platforms. In fact, Hello AI World itself shows how comprehensive NVIDIA's ecosystem strategy is.

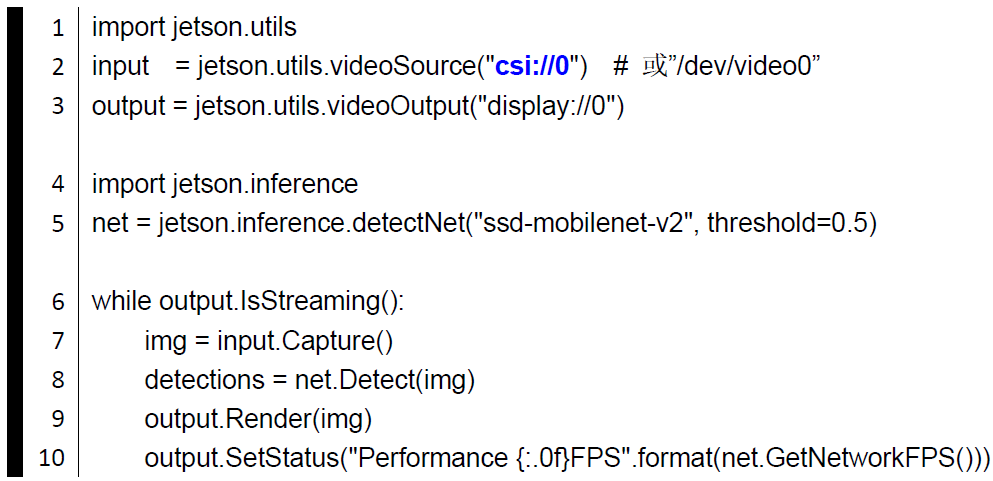

Finally, one concrete demonstration of NVIDIA's software or AI ecosystem capability: NVIDIA's official WeChat account published 10 lines of Python code, shown in the figure above, "to detect and recognize 90 classes of objects with deep learning". With the hardware resources of the Jetson Nano 2GB, it seems that even excellent algorithms such as YOLOv4 or SSD-MobileNet only reach 4-6 FPS.

However, when this Python code runs within the JetPack ecosystem, the system generates a corresponding TensorRT acceleration engine for the model. The first line imports the utility library module, and the following lines create the input and output objects. The fourth line imports the "deep learning inference" module, and the next creates a net object with detectNet() to handle the subsequent "object detection and recognition inference" task.
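Building a TensorRT engine takes time, so it makes sense to build it once and reuse it. The sketch below shows that build-once, reuse-later caching pattern in general terms. It is not jetson-inference's actual code. `build_engine` is a hypothetical stand-in for the slow optimization step (for example, a TensorRT builder call). The cache is keyed by a hash of the model file, so a changed model triggers a rebuild.

```python
import hashlib
from pathlib import Path
from typing import Callable


def load_or_build_engine(
    model_path: Path,
    build_engine: Callable[[bytes], bytes],
    cache_dir: Path,
) -> bytes:
    """Return a serialized engine for `model_path`, building it only once.

    `build_engine` is hypothetical: any function that turns model bytes into
    serialized engine bytes. Results are cached on disk under a name derived
    from a hash of the model, so later runs skip the expensive build.
    """
    model_bytes = model_path.read_bytes()
    key = hashlib.sha256(model_bytes).hexdigest()[:16]
    cache_file = cache_dir / f"{model_path.stem}.{key}.engine"
    if cache_file.exists():
        return cache_file.read_bytes()        # fast path: reuse cached engine
    engine = build_engine(model_bytes)        # slow path: first run only
    cache_dir.mkdir(parents=True, exist_ok=True)
    cache_file.write_bytes(engine)
    return engine
```

One caveat for real engines: a serialized TensorRT engine is tied to the GPU and TensorRT version that built it. A production cache key should therefore include those versions as well as the model hash.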

In the while loop, the seventh line reads a frame, and the eighth line detects the objects in the image that meet the confidence threshold. Thanks to TensorRT, this line runs much faster, and beginners never have to deal with calling TensorRT themselves. The ninth line overlays each detected object's box, class name and confidence onto the image. With NVIDIA's underlying implementation, the original 4-6 FPS rises to 10+ FPS. We find this example quite representative.
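The real detectNet performs the thresholding and overlay internally, on the GPU. As a plain-Python illustration of what the eighth and ninth lines are described as doing, here is a minimal sketch. The `Detection` record and its field names are hypothetical, chosen for this sketch rather than taken from jetson-inference. The 0.5 default threshold is also our assumption.

```python
from typing import List, NamedTuple, Sequence, Tuple


class Detection(NamedTuple):
    """Hypothetical detection record; field names are chosen for this sketch."""
    class_name: str
    confidence: float   # 0.0 .. 1.0
    left: int
    top: int
    right: int
    bottom: int


def keep_confident(dets: Sequence[Detection], threshold: float = 0.5) -> List[Detection]:
    """Drop detections whose confidence is below the threshold."""
    return [d for d in dets if d.confidence >= threshold]


def overlay_label(d: Detection) -> str:
    """Text drawn next to a box: class name plus confidence as a percentage."""
    return f"{d.class_name} {d.confidence * 100:.1f}%"


def box_corners(d: Detection) -> Tuple[Tuple[int, int], Tuple[int, int]]:
    """Top-left and bottom-right corners, as a drawing call would need them."""
    return (d.left, d.top), (d.right, d.bottom)
```

Raising the threshold trades missed objects for fewer false alarms; the right value depends on the model and the scene.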

Using the Jetson Nano 2GB as an example, this article has briefly argued that once embedded development boards gain AI capability, both performance and ecosystem are essential to making development friendly. Hardware performance is the basic guarantee, and the trend is for more embedded boards to gain AI compute. As for development ecosystems, vendors led by NVIDIA already greatly reduce the difficulty of development, at least for beginners, while delivering significant gains in performance and efficiency. (This also indirectly suggests that NVIDIA may really be a software company...)